Hey everyone! 👋 Let’s talk about building things – not just features, but the kind of tech that reshapes how a product feels and functions.

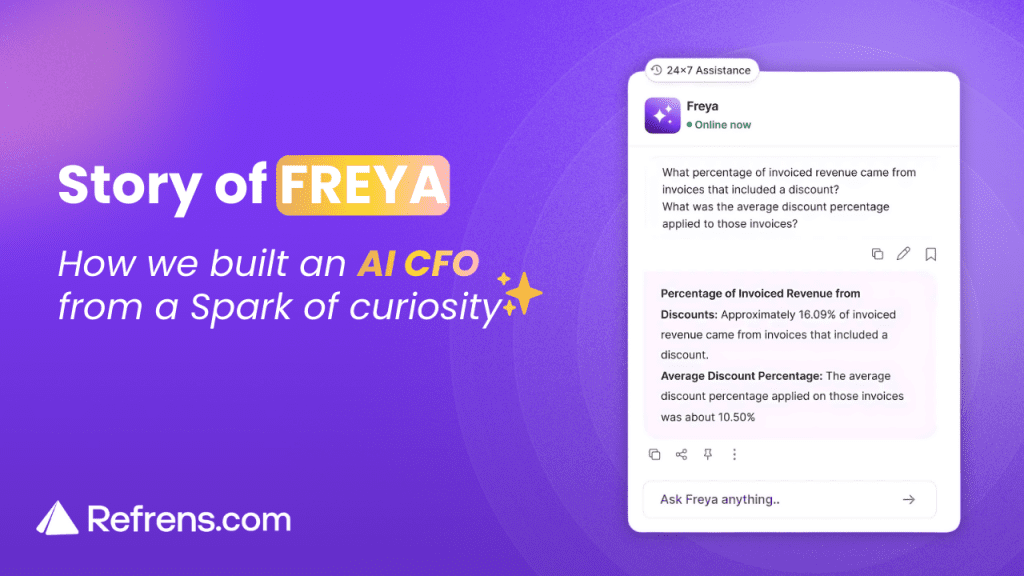

This is the story of Freya, the AI Accounting Assistant we built for our users at Refrens. It’s a tale of late-night coding, hitting technical brick walls, and that “aha!” moment when it all just works.

Grab your coffee, and let’s get into the weeds!

Part 1: The Rogue POC and the Hype Train 🚂

It all started back in September 2023. My days were a classic software engineering montage: “Optimize this,” “Refactor that,” and “Fix the bug that the refactor caused.” You know the drill. I was itching for something new, something that would stretch my skills beyond the familiar. Generative AI was the talk of the town, and I wanted a front-row seat on the hype train. The ticket price? Build a legit Proof-of-Concept.

At Refrens, we have a culture of “fail fast, learn faster.” So, I spun up a new private repo, called it ‘Sentient’ (a tad optimistic, I know 😂), and got to work. My goal was simple: build a chat engine that could intelligently answer user questions about our platform. No more scrolling through help docs to figure out how to bulk-upload invoices.

The Tech Stack of an Experiment:

- Backend: Python with FastAPI. The choice was obvious. Python is the lingua franca of AI/ML. All the cutting-edge libraries, from transformers to torch, get their first-class bindings there.

- Vector DB: Neo4j. This was a strategic gamble. It’s primarily a graph database but had just rolled out vector search. My thinking was, “Why add another single-purpose provider like Pinecone when we could potentially leverage graph-based RAG to connect disparate information nodes in the future?” It felt more scalable from day one.

- LLM Framework: LangChain. It was the open-source darling of the moment, providing a high-level abstraction over LLM provider APIs. This let me focus on the core logic—chunking strategies for our docs, choosing an embedding model, and perfecting the retrieval prompt—rather than getting bogged down in boilerplate.

The initial POC was a simple RAG (Retrieval-Augmented Generation) system. I fed our help articles into a text splitter, generated embeddings, and stored them in Neo4j. The first time I asked a question and saw the LLM craft an answer from our data—something it had never seen during its training—was pure magic. ✨ It felt like the possibilities were endless.
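If you're new to RAG, the split, embed, store, retrieve loop above fits in a few dozen lines. The sketch below is a dependency-free TypeScript illustration, not the original Python POC. Several pieces are simplifying assumptions: the fixed-size character splitter, the toy bag-of-words `embed` function (standing in for a real embedding model), and the in-memory array (standing in for Neo4j's vector index).

```typescript
type Vector = Record<string, number>;
type Chunk = { text: string; vector: Vector };

// Fixed-size character splitter with overlap (parameters are illustrative).
function splitText(text: string, size = 200, overlap = 50): string[] {
  if (overlap >= size) throw new Error("overlap must be smaller than size");
  const chunks: string[] = [];
  for (let start = 0; start < text.length; start += size - overlap) {
    chunks.push(text.slice(start, start + size));
    if (start + size >= text.length) break; // this chunk reached the end
  }
  return chunks;
}

// Stand-in for a real embedding model: lowercase term counts.
// Object.create(null) avoids collisions with keys like "constructor".
function embed(text: string): Vector {
  const vec: Vector = Object.create(null);
  const tokens = text.toLowerCase().match(/[a-z0-9]+/g) || [];
  for (const t of tokens) vec[t] = (vec[t] || 0) + 1;
  return vec;
}

function norm(v: Vector): number {
  let sum = 0;
  for (const k of Object.keys(v)) sum += v[k] * v[k];
  return Math.sqrt(sum);
}

// Cosine similarity between two sparse vectors.
function cosine(a: Vector, b: Vector): number {
  let dot = 0;
  for (const k of Object.keys(a)) dot += a[k] * (b[k] || 0);
  const denom = norm(a) * norm(b);
  return denom === 0 ? 0 : dot / denom;
}

// "Ingest": chunk every article and store its vector (Neo4j's job in the POC).
function buildIndex(docs: string[]): Chunk[] {
  const index: Chunk[] = [];
  for (const doc of docs) {
    for (const text of splitText(doc)) index.push({ text, vector: embed(text) });
  }
  return index;
}

// "Retrieve": rank chunks by similarity to the question and keep the top k.
function retrieve(index: Chunk[], question: string, k = 2): string[] {
  const q = embed(question);
  return index
    .map((c) => ({ text: c.text, score: cosine(q, c.vector) }))
    .sort((x, y) => y.score - x.score)
    .slice(0, k)
    .map((r) => r.text);
}
```

After `buildIndex` runs over the help articles, `retrieve(index, "How do I bulk upload invoices?")` returns the best-matching chunks. Those chunks would then be pasted into the LLM prompt as context for the answer.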

I demoed it to the team and our founders. They were blown away. But, as it often happens in a growing startup, we didn’t have the bandwidth to go all-in just yet. So, ‘Sentient’ went into hibernation, and I went back to my regular programming.

Part 2: The Great AI Awakening (and the Great Refactor) ☀️

Fast forward to April 2025. The product had grown, the team was bigger, and the timing was finally right. Our CTO, Mohit, gave me the green light: “It’s time. We go all in with AI.” 🚀

But the game had changed. The POC was great for a solo mission, but not for production.

The New Mandate:

- TypeScript or Bust: Our entire ecosystem is built on TypeScript and Node.js. A rogue Python server would be an operational silo and a maintenance nightmare. The POC had to be rebuilt from the ground up for consistency and team velocity.

- Go Beyond RAG: Answering help docs is cool, but the real value was letting users talk to their own data. Freya needed to become an AI CFO, capable of querying their invoices, expenses, and leads.

- Seamless UI: It had to feel native, tightly integrated into our React and React Native apps.

So, the refactor began. I chose Fastify for our new TypeScript backend. Its schema-based serialization offers incredible performance, and its plugin-based architecture was perfect for building a secure, robust microservice. To manage the complex, multi-step flows we envisioned, I opted for LangGraph. If LangChain gives you the LEGO bricks, LangGraph provides the blueprint and the state machine. It allows you to define agents as nodes in a graph and control the flow with conditional edges, preventing the chaotic, unpredictable behavior of early agent frameworks.
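To make "agents as nodes, flow controlled by conditional edges" concrete, here is a tiny dependency-free state machine in TypeScript. It mimics the shape of the idea rather than LangGraph's actual API (in LangGraph.js you'd reach for `StateGraph`, `addNode`, and `addConditionalEdges`). The node names and the retry-on-error loop are hypothetical and do not describe Freya's real graph.

```typescript
// Shared state that every node reads and returns (fields are hypothetical).
type State = {
  question: string;
  attempts: number;
  query?: string;
  error?: string;
  answer?: string;
};

type NodeFn = (state: State) => State;
type Router = (state: State) => string; // returns the next node's name

const END = "__end__";

class ToyGraph {
  private nodes: Record<string, NodeFn> = Object.create(null);
  private routes: Record<string, Router> = Object.create(null);

  addNode(name: string, fn: NodeFn): this {
    this.nodes[name] = fn;
    return this;
  }

  // A plain edge is a router that always picks the same target.
  addEdge(from: string, to: string): this {
    this.routes[from] = () => to;
    return this;
  }

  // A conditional edge picks the target by inspecting the current state.
  addConditionalEdge(from: string, router: Router): this {
    this.routes[from] = router;
    return this;
  }

  // Run nodes until END, with a step cap so a bad router cannot loop forever.
  run(entry: string, initial: State, maxSteps = 20): State {
    let state = initial;
    let current = entry;
    for (let step = 0; current !== END; step++) {
      if (step >= maxSteps) throw new Error("graph did not reach END");
      const node = this.nodes[current];
      const route = this.routes[current];
      if (!node || !route) throw new Error(`no node or outgoing edge for "${current}"`);
      state = node(state);
      current = route(state);
    }
    return state;
  }
}

// Hypothetical flow: generate a query, execute it, retry on failure, then answer.
const graph = new ToyGraph()
  .addNode("generateQuery", (s) => ({
    ...s,
    attempts: s.attempts + 1,
    // Stand-in for an LLM call: the first attempt yields a broken pipeline.
    query: s.attempts === 0 ? "INVALID" : '[{"$match":{}}]',
    error: undefined,
  }))
  .addNode("executeQuery", (s) => (s.query === "INVALID" ? { ...s, error: "bad pipeline" } : s))
  .addNode("respond", (s) => ({ ...s, answer: `ran ${s.query}` }))
  .addEdge("generateQuery", "executeQuery")
  // Retry generation on error (at most 3 attempts), otherwise go answer.
  .addConditionalEdge("executeQuery", (s) => (s.error && s.attempts < 3 ? "generateQuery" : "respond"))
  .addEdge("respond", END);

const result = graph.run("generateQuery", { question: "Who are my top clients?", attempts: 0 });
console.log(result.attempts, result.answer); // 2 ran [{"$match":{}}]
```

Because the router is just a function of state, every possible transition is declared up front, and the step cap guarantees the run terminates.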

Here’s a pro-tip for anyone doing a major refactor: use AI to help you. I fed my old Python code and the new requirements into Gemini, which laid out a detailed migration plan. Then, I used VS Code Copilot to translate the logic. It was like having a team of junior engineers working with me. The result? A production-ready TypeScript server—complete with JWT authentication proxied from our main backend, rate limiting, and schema-based input validation—was up and running in days, not weeks.
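The post doesn't say how rate limiting was wired up. In Fastify this is often delegated to the `@fastify/rate-limit` plugin. Purely to illustrate the mechanism, here's a dependency-free per-user token bucket. The capacity, refill rate, and class name are assumptions, and the clock is injectable so the behavior is easy to test.

```typescript
// Per-user token bucket: each user may burst up to `capacity` requests,
// and tokens refill continuously at `refillPerSec`.
class TokenBucketLimiter {
  private buckets: Record<string, { tokens: number; last: number }> = Object.create(null);

  constructor(
    private readonly capacity: number,
    private readonly refillPerSec: number,
    private readonly now: () => number = () => Date.now(), // injectable clock (ms)
  ) {}

  // true = let the request through; false = respond with HTTP 429.
  tryConsume(userId: string): boolean {
    const t = this.now();
    const bucket = this.buckets[userId] || { tokens: this.capacity, last: t };
    // Refill in proportion to elapsed time, never above capacity.
    const elapsedSec = Math.max(0, t - bucket.last) / 1000;
    bucket.tokens = Math.min(this.capacity, bucket.tokens + elapsedSec * this.refillPerSec);
    bucket.last = t;
    this.buckets[userId] = bucket;
    if (bucket.tokens < 1) return false;
    bucket.tokens -= 1;
    return true;
  }
}
```

In a Fastify app, a check like this would typically run in an `onRequest` hook. It would be keyed by the user ID taken from the already-verified JWT and would reply with HTTP 429 when `tryConsume` returns `false`.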

Part 3: The MongoDB Heist: Teaching an LLM to Speak NoSQL 🕵️

This was the boss battle. How do you let an LLM securely query a user’s data in MongoDB? SQL databases have clear schemas, but Mongo is famously flexible. There were no clear guides for this.

My first step was to see if LLMs even understood MongoDB aggregation pipelines. I went to a playground, gave GPT-4.1-mini a few prompts, and was shocked. It did a surprisingly good job of generating valid queries with zero context. Okay, challenge accepted.

The solution was all about meticulous context and prompt engineering.

- The Schema as a Blueprint: I created a minimal TypeBox schema from our database models. This was a “schema-for-LLMs,” a detailed, human-readable blueprint with descriptions for every field, explaining its purpose and data type. This is crucial for denormalized NoSQL data.

- Learning by Example (Few-Shot Prompting): I wrote a handful of curated examples of common user queries and the exact MongoDB aggregation pipeline for each. This gave the LLM a “feel” for our data structure and query patterns.

- Explicit Education: I wrote detailed instructions in the system prompt about our business logic. For example: “For businesses in India, tax is split into CGST and SGST. Other countries use the ‘X’ tax field.”

- Security First (The Hard Part): This was non-negotiable.
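The post doesn't list the specific security measures, so treat the following as a hypothetical sketch of how the pieces above could fit together, not Freya's implementation. It assumes a tenant field named `businessId` and uses plain objects instead of TypeBox so it runs without dependencies. `buildSystemPrompt` combines the field descriptions, business rules, and few-shot examples into a single prompt. `guardPipeline` rejects, at any nesting depth, stages that write data or read other collections and operators that run server-side JavaScript. It then forces a tenant-scoping `$match` to the front of whatever the LLM produced.

```typescript
// Hypothetical "schema-for-LLMs" entry: every field carries a plain-English description.
type FieldDoc = { name: string; type: string; description: string };
type Example = { question: string; pipeline: object[] };

// Assemble schema, business rules, and few-shot examples into one system prompt.
function buildSystemPrompt(collection: string, fields: FieldDoc[], rules: string[], examples: Example[]): string {
  const schema = fields.map((f) => `- ${f.name} (${f.type}): ${f.description}`).join("\n");
  const ruleText = rules.map((r) => `- ${r}`).join("\n");
  const shots = examples
    .map((e) => `Question: ${e.question}\nPipeline: ${JSON.stringify(e.pipeline)}`)
    .join("\n\n");
  return [
    `You write MongoDB aggregation pipelines for the "${collection}" collection.`,
    `Fields:\n${schema}`,
    `Business rules:\n${ruleText}`,
    `Examples:\n${shots}`,
    "Reply with a JSON array of pipeline stages and nothing else.",
  ].join("\n\n");
}

// Keys rejected anywhere in the pipeline (including inside $facet sub-pipelines):
// stages that write data or read other collections, and server-side JavaScript.
const FORBIDDEN_KEYS = ["$out", "$merge", "$lookup", "$unionWith", "$graphLookup", "$where", "$function", "$accumulator"];

// Depth-first search for the first forbidden key.
function findForbiddenKey(node: unknown): string | undefined {
  if (Array.isArray(node)) {
    for (const n of node) {
      const hit = findForbiddenKey(n);
      if (hit) return hit;
    }
    return undefined;
  }
  if (node !== null && typeof node === "object") {
    const obj = node as Record<string, unknown>;
    for (const k of Object.keys(obj)) {
      if (FORBIDDEN_KEYS.indexOf(k) !== -1) return k;
      const hit = findForbiddenKey(obj[k]);
      if (hit) return hit;
    }
  }
  return undefined;
}

// Validate an LLM-generated pipeline and pin it to the caller's tenant.
function guardPipeline(pipeline: unknown, businessId: string): object[] {
  if (!Array.isArray(pipeline)) throw new Error("pipeline must be a JSON array");
  for (const stage of pipeline) {
    if (stage === null || typeof stage !== "object" || Array.isArray(stage)) {
      throw new Error("every stage must be an object");
    }
    if (Object.keys(stage).length !== 1) throw new Error("every stage must have exactly one operator");
    const bad = findForbiddenKey(stage);
    if (bad) throw new Error(`${bad} is not allowed`);
  }
  // Tenant scoping always comes first, whatever the LLM wrote.
  return [{ $match: { businessId } }, ...pipeline];
}
```

Prepending the `$match` in code, rather than trusting the LLM to include it, is intended to keep a prompt-injected question from widening the query beyond the caller's own records.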

Things were looking good… until we hit the date problem.

Part 4: The Date Dilemma: A Relatively Huge Problem 🗓️

LLMs are notoriously bad with dates. They are stateless text predictors, lacking a real-time clock or a true understanding of temporal relativity. Worse, MongoDB date comparisons need real Date values (ISODate in the shell), not strings like “yesterday.” The queries were failing left and right.

After much brainstorming, we landed on a solution that was both simple and incredibly robust. Instead of asking the LLM to figure out dates, we told it to use a placeholder.

We instructed the LLM to generate dates in a specific, structured format:

- For specific dates: [[DATE:2023-01-15]]

- For relative dates: [[RELATIVE:last 6 hours]]

- For single date objects (needed for calculations): [[RELATIVE:today:SINGLE]]

This was a game-changer. The LLM’s job was now easy: just insert the right placeholder. Our backend could then use a simple RegEx to find these placeholders, parse the expression using date-fns, and replace them with precise, timezone-aware Date objects. We had decoupled the LLM’s fuzzy language understanding from our application’s deterministic logical execution.
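Here is a minimal sketch of that placeholder pass. It is an assumption-heavy reconstruction, not Freya's code. It uses built-in `Date` arithmetic in UTC instead of date-fns and the user's timezone, and it supports only a tiny relative grammar (`today`, `yesterday`, `last N minutes/hours/days/weeks`). It also assumes that a relative placeholder expands to a `{ $gte, $lte }` range unless it carries the `:SINGLE` suffix.

```typescript
type DateRange = { $gte: Date; $lte: Date };

const MS_PER: Record<string, number> = {
  minute: 60 * 1000,
  hour: 60 * 60 * 1000,
  day: 24 * 60 * 60 * 1000,
  week: 7 * 24 * 60 * 60 * 1000,
};

// Midnight UTC of the given instant (a real system would use the user's timezone).
function startOfUtcDay(d: Date): Date {
  return new Date(Date.UTC(d.getUTCFullYear(), d.getUTCMonth(), d.getUTCDate()));
}

// Tiny, hypothetical grammar for relative expressions.
function resolveRelative(expr: string, now: Date): DateRange {
  const e = expr.trim().toLowerCase();
  const today = startOfUtcDay(now);
  if (e === "today") return { $gte: today, $lte: now };
  if (e === "yesterday") {
    return { $gte: new Date(today.getTime() - MS_PER.day), $lte: new Date(today.getTime() - 1) };
  }
  const m = /^last (\d+) (minute|hour|day|week)s?$/.exec(e);
  if (m) return { $gte: new Date(now.getTime() - Number(m[1]) * MS_PER[m[2]]), $lte: now };
  throw new Error(`Unsupported relative date: ${expr}`);
}

// Matches a string value that is exactly one placeholder, e.g. [[DATE:2023-01-15]].
const PLACEHOLDER = /^\[\[(DATE|RELATIVE):([^\]]+)\]\]$/;

function resolvePlaceholder(value: string, now: Date): unknown {
  const m = PLACEHOLDER.exec(value);
  if (!m) return value; // ordinary string, leave untouched
  if (m[1] === "DATE") {
    const d = new Date(`${m[2]}T00:00:00Z`);
    if (isNaN(d.getTime())) throw new Error(`Invalid date: ${m[2]}`);
    return d;
  }
  const parts = m[2].split(":");
  const range = resolveRelative(parts[0], now);
  // ":SINGLE" asks for one Date (here: the start of the range) instead of a range.
  return parts[1] === "SINGLE" ? range.$gte : range;
}

// Walk the parsed LLM output and swap every placeholder for real Dates.
function resolveDates(node: unknown, now: Date = new Date()): unknown {
  if (node instanceof Date) return node;
  if (typeof node === "string") return resolvePlaceholder(node, now);
  if (Array.isArray(node)) return node.map((n) => resolveDates(n, now));
  if (node !== null && typeof node === "object") {
    const out: Record<string, unknown> = {};
    for (const key of Object.keys(node)) {
      out[key] = resolveDates((node as Record<string, unknown>)[key], now);
    }
    return out;
  }
  return node;
}
```

So an LLM-emitted stage like `{ "$match": { "createdAt": "[[RELATIVE:last 6 hours]]" } }` becomes `{ $match: { createdAt: { $gte: <six hours ago>, $lte: <now> } } }`, with real `Date` values, before it ever reaches MongoDB.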

Part 5: From Raw Data to Real Insights 🗣️

Okay, so we could generate a query, run it securely, and get back a JSON object from MongoDB. Awesome. But showing a user a raw JSON response is a terrible experience. We needed another agent in the pipeline.

I created the Natural Language Response Agent. Its job was to take the raw, structured data from the MongoDB query, along with the original user question, and translate it into a beautiful, human-readable markdown response.

This agent was also given context about the database schema so it could understand what fields actually meant and how they were used. It was prompted to identify the key insights from the data and present them clearly, often using tables for comparisons or bullet points for lists. This step was the difference between a data-dump and a genuine “AI CFO” experience.
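One way to picture the response agent's input is the hypothetical prompt builder below. Its name, the 50-row cap, and the instruction wording are assumptions. It shows the three ingredients described above (the original question, the field meanings, and the raw result). It also adds one practical guard that the post doesn't mention: capping the rows sent to the model so a large result set can't blow past the context window.

```typescript
// Hypothetical field note passed along so the agent knows what each key means.
type FieldNote = { name: string; description: string };

// Build the input for the Natural Language Response Agent.
function buildResponsePrompt(
  question: string,
  rows: Record<string, unknown>[],
  fields: FieldNote[],
  maxRows = 50, // assumed cap to keep large results inside the context window
): string {
  const glossary = fields.map((f) => `- ${f.name}: ${f.description}`).join("\n");
  let result: string;
  if (rows.length === 0) {
    result = "The query returned no matching records.";
  } else {
    result = `Query result (JSON):\n${JSON.stringify(rows.slice(0, maxRows), null, 2)}`;
    if (rows.length > maxRows) result += `\n(Showing ${maxRows} of ${rows.length} rows.)`;
  }
  return [
    `User question: ${question}`,
    `What the fields mean:\n${glossary}`,
    result,
    "Answer in Markdown. Lead with the key insight, use a table for comparisons and " +
      "bullet points for lists, and only cite numbers that appear in the result.",
  ].join("\n\n");
}
```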

Part 6: From Solo Agent to an AI Symphony 🎶

As users started interacting with Freya, we noticed a new pattern: they weren’t asking one question at a time. They were asking things like:

“Show me my top 5 clients by revenue, what was their payment history, and take me to the latest invoice for the top client.”

Our initial single-intent system would get confused. We needed to evolve. We re-architected Freya into a multi-agent system inspired by the “supervisor” pattern.

Here’s the new flow:

- Query Decomposer: The user’s prompt first goes to a decomposer agent. This is an LLM call with a prompt engineered to break down complex requests and output a JSON array of sub-queries, each with an id, intent, and dependencies.

- Supervisor Agent: This agent is the orchestra conductor, implemented as the main loop in our LangGraph flow. It maintains the state of all sub-queries and executes them in the correct order based on the dependency graph.

- Tool-Using Agents: The supervisor passes each sub-query to the right specialized agent:

- Result Aggregator: Once all sub-queries are done, this agent gathers all the results and crafts a single, coherent, human-readable response using the Natural Language Response Agent.
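Here is a rough sketch of the decomposer's output contract and the supervisor's scheduling loop. The `id`, `intent`, and `dependencies` fields follow the description above. The `query` field, the intent labels, and the wave-based scheduling are assumptions, not Freya's actual implementation. Wave-based scheduling is a level-by-level topological sort, so independent sub-queries could run in parallel.

```typescript
// One sub-query emitted by the decomposer.
type SubQuery = {
  id: string;
  intent: string; // e.g. "data_query" or "navigation" (hypothetical labels)
  query: string; // the self-contained sub-question (assumed field)
  dependencies: string[]; // ids whose results this sub-query needs
};

type Handler = (q: SubQuery, deps: Record<string, unknown>) => Promise<unknown>;

// Validate the decomposer's raw JSON before trusting it.
function parseDecomposition(raw: string): SubQuery[] {
  const parsed: unknown = JSON.parse(raw);
  if (!Array.isArray(parsed)) throw new Error("decomposer must return a JSON array");
  return parsed.map((q: any) => {
    if (!q || typeof q.id !== "string" || typeof q.intent !== "string" || typeof q.query !== "string") {
      throw new Error("sub-query is missing id, intent, or query");
    }
    const deps = Array.isArray(q.dependencies) ? q.dependencies.map(String) : [];
    return { id: q.id, intent: q.intent, query: q.query, dependencies: deps };
  });
}

// Level-by-level topological sort: every id in a wave has all of its
// dependencies satisfied by earlier waves.
function planWaves(subQueries: SubQuery[]): string[][] {
  const known: Record<string, boolean> = Object.create(null);
  for (const q of subQueries) {
    if (known[q.id]) throw new Error(`duplicate id ${q.id}`);
    known[q.id] = true;
  }
  for (const q of subQueries) {
    for (const d of q.dependencies) if (!known[d]) throw new Error(`unknown dependency ${d}`);
  }
  const done: Record<string, boolean> = Object.create(null);
  const waves: string[][] = [];
  let scheduled = 0;
  while (scheduled < subQueries.length) {
    const wave = subQueries
      .filter((q) => !done[q.id] && q.dependencies.every((d) => done[d]))
      .map((q) => q.id);
    if (wave.length === 0) throw new Error("dependency cycle detected");
    for (const id of wave) done[id] = true;
    scheduled += wave.length;
    waves.push(wave);
  }
  return waves;
}

// Supervisor loop: run each wave, feeding dependency results to each agent.
async function runPlan(subQueries: SubQuery[], agents: Record<string, Handler>): Promise<Record<string, unknown>> {
  const byId: Record<string, SubQuery> = {};
  for (const q of subQueries) byId[q.id] = q;
  const results: Record<string, unknown> = {};
  for (const wave of planWaves(subQueries)) {
    const outputs = await Promise.all(
      wave.map((id) => {
        const q = byId[id];
        const agent = Object.prototype.hasOwnProperty.call(agents, q.intent) ? agents[q.intent] : undefined;
        if (!agent) throw new Error(`no agent registered for intent ${q.intent}`);
        const deps: Record<string, unknown> = {};
        for (const d of q.dependencies) deps[d] = results[d];
        return agent(q, deps);
      }),
    );
    wave.forEach((id, i) => {
      results[id] = outputs[i];
    });
  }
  return results; // handed to the Result Aggregator
}
```

For the example question above, "top 5 clients" would land in the first wave. "Payment history" and "latest invoice for the top client" both depend on it and would share the second wave.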

This architecture transformed Freya from a simple tool into a true reasoning engine capable of handling complex, multi-intent financial analysis.

Part 7: The Final Polish: Voice, UI, and the Horizon 🌅

With the core logic in place, we focused on the user experience. I resurrected an old chat UI component from a past project called “Refrens Pulse,” refactored it for Freya, and got it into our web and React Native apps.

Then, we gave Freya a voice. We integrated the Web Speech API on the client side for real-time transcription and added a Text-to-Speech agent on the backend. The final touch was a “speech pre-processor” agent that converts structured data (like markdown tables) into a more natural, conversational format. Now, you can actually talk to your AI CFO.
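The post describes the speech pre-processor as an LLM agent. The sketch below substitutes a plain rule-based transform, purely to illustrate the kind of rewrite it performs. Each Markdown table row becomes one spoken sentence, and Markdown bullet, heading, and bold markers are stripped. The function names and output phrasing are hypothetical.

```typescript
// Split a Markdown table row like "| Acme | 1200 |" into trimmed cells.
function tableCells(line: string): string[] {
  return line.trim().replace(/^\|/, "").replace(/\|$/, "").split("|").map((c) => c.trim());
}

const isTableLine = (line: string): boolean => line.trim().charAt(0) === "|";
// Separator rows look like "|---|:---:|" (only pipes, colons, dashes, spaces).
const isSeparatorLine = (line: string): boolean => /^[\s|:-]+$/.test(line) && line.indexOf("-") !== -1;

// Turn each data row into a sentence: "<first cell>: <header> <value>, ...".
function rowsToSentences(header: string[], rows: string[][]): string[] {
  return rows.map((row) => {
    const details = row
      .slice(1)
      .map((value, i) => (header[i + 1] ? `${header[i + 1]} ${value}` : value))
      .join(", ");
    return details ? `${row[0]}: ${details}.` : `${row[0]}.`;
  });
}

// Rule-based stand-in for the speech pre-processor agent.
function toSpeakable(markdown: string): string {
  const lines = markdown.split("\n");
  const spoken: string[] = [];
  let i = 0;
  while (i < lines.length) {
    if (isTableLine(lines[i])) {
      const block: string[] = [];
      while (i < lines.length && isTableLine(lines[i])) block.push(lines[i++]);
      const rows = block.filter((l) => !isSeparatorLine(l)).map(tableCells);
      if (rows.length > 1) spoken.push(...rowsToSentences(rows[0], rows.slice(1)));
      continue;
    }
    const text = lines[i++]
      .replace(/^\s*[-*]\s+/, "") // bullet markers
      .replace(/^#+\s*/, "") // heading markers
      .replace(/\*\*|__|`/g, "") // bold and inline-code markers
      .trim();
    // End each spoken fragment with punctuation so TTS pauses naturally.
    if (text) spoken.push(/[.!?:]$/.test(text) ? text : `${text}.`);
  }
  return spoken.join(" ");
}
```

For instance, a two-row revenue table comes out as "Acme: Revenue 1200, Invoices 3. Globex: Revenue 800, Invoices 2.", which reads far more naturally aloud than pipes and dashes.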

The journey of building Freya has been one of the most challenging and rewarding experiences of my career. It started as a curious experiment and has grown into an integral part of the Refrens platform. It’s a testament to what’s possible when you’re given the freedom to explore and the drive to solve hard problems.

What’s next? We’re already expanding our Gen AI capabilities into Document Intelligence for smarter invoice scanning and Lead Enrichment to give our users superhuman sales insights.

The story of Freya isn’t over. In fact, it’s just beginning.

– Nitish Devadiga (Senior Software Engineer, Refrens.com)